vLLM inference

vLLM is a library designed for efficient serving of large language models (LLMs). It achieves high serving throughput with continuous batching and manages attention key-value (KV) cache memory efficiently with PagedAttention. It supports a wide range of LLMs, such as Llama, OPT, Mixtral, StableLM, and Falcon.
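PagedAttention stores each sequence's KV cache in fixed-size blocks that do not have to be contiguous in GPU memory. A per-sequence block table maps logical blocks to physical ones, so memory is allocated on demand and returned as soon as a request finishes. The toy allocator below illustrates only that bookkeeping idea. It is not vLLM's implementation, and `BlockAllocator`, `append_token`, `release`, and the block size are hypothetical.

```python
from dataclasses import dataclass, field
from typing import Dict, List

BLOCK_SIZE = 16  # tokens per KV block (illustrative value, hypothetical)


@dataclass
class BlockAllocator:
    """Toy bookkeeping for paged KV-cache memory (hypothetical, not vLLM's API)."""

    num_blocks: int
    free: List[int] = field(init=False)
    tables: Dict[str, List[int]] = field(default_factory=dict)  # seq_id -> physical block ids
    lengths: Dict[str, int] = field(default_factory=dict)       # seq_id -> tokens stored

    def __post_init__(self) -> None:
        # Every physical block starts out free.
        self.free = list(range(self.num_blocks))

    def append_token(self, seq_id: str) -> None:
        """Reserve KV space for one more token, taking a new block only when the last one is full."""
        table = self.tables.setdefault(seq_id, [])
        length = self.lengths.get(seq_id, 0)
        if length % BLOCK_SIZE == 0:  # no block yet, or the current block is full
            if not self.free:
                raise MemoryError("no free KV blocks left")
            table.append(self.free.pop())
        self.lengths[seq_id] = length + 1

    def release(self, seq_id: str) -> None:
        """Return a finished sequence's blocks to the free pool."""
        self.free.extend(self.tables.pop(seq_id, []))
        self.lengths.pop(seq_id, None)


alloc = BlockAllocator(num_blocks=8)
for _ in range(20):
    alloc.append_token("a")  # 20 tokens need 2 blocks of 16
for _ in range(5):
    alloc.append_token("b")  # 5 tokens need 1 block
print(alloc.tables, len(alloc.free))
alloc.release("a")  # sequence "a" finished; its blocks are reusable immediately
print(len(alloc.free))
```

Because blocks are handed out one at a time, a sequence never reserves memory for tokens it has not produced yet, which is the source of the memory savings described above.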

This document demonstrates how to build an LLM application using BentoML and vLLM.

All the source code in this tutorial is available in the BentoVLLM GitHub repository.

Prerequisites

- Python 3.8+ and `pip` installed. See the Python downloads page to learn more.
- You have a basic understanding of key concepts in BentoML, such as Services. We recommend you read Quickstart first.
- If you want to test the project locally, you need an NVIDIA GPU with at least 16 GB of VRAM.
- (Optional) We recommend you create a virtual environment for dependency isolation. See the Conda documentation or the Python documentation for details.
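As a rough back-of-envelope check on the GPU requirement, the sketch below estimates memory for the model weights and for the KV cache. The configuration values (32 layers, 8 key-value heads, head dimension 128, about 7.24 billion parameters, 16-bit weights) are assumptions for a Mistral-7B-class model; check the model's `config.json` for the real numbers. It ignores activations, CUDA overhead, and the share of memory vLLM reserves through its `gpu_memory_utilization` setting.

```python
def weight_bytes(num_params: float, bytes_per_param: int = 2) -> float:
    """Memory for model weights, e.g. 2 bytes per parameter in fp16/bf16."""
    return num_params * bytes_per_param


def kv_cache_bytes_per_token(num_layers: int, num_kv_heads: int, head_dim: int,
                             bytes_per_value: int = 2) -> int:
    """Each layer stores one key and one value vector per KV head for every token."""
    return 2 * num_layers * num_kv_heads * head_dim * bytes_per_value


GIB = 1024 ** 3

# Assumed Mistral-7B-style config: 32 layers, 8 KV heads (grouped-query attention), head_dim 128.
per_token = kv_cache_bytes_per_token(num_layers=32, num_kv_heads=8, head_dim=128)
weights = weight_bytes(7.24e9)  # assumed parameter count

print(f"KV cache per token: {per_token / 1024:.0f} KiB")
print(f"KV cache for 1024 tokens: {per_token * 1024 / GIB:.3f} GiB")
print(f"Weights (16-bit): {weights / GIB:.1f} GiB")
```

Under these assumptions the weights take roughly 13.5 GiB and each token of context adds 128 KiB of KV cache, so the weights dominate and the remaining VRAM bounds how many tokens can be cached at once.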

Install dependencies

Clone the project repository and install all the dependencies.

```bash
git clone https://github.com/bentoml/BentoVLLM.git
cd BentoVLLM/mistral-7b-instruct
pip install -r requirements.txt && pip install -U "pydantic>=2.0"
```
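After installing, a quick sanity check can confirm that the interpreter and key packages match the prerequisites. This is a minimal sketch using only the standard library; the package list (`bentoml`, `vllm`, and `pydantic` 2.x) reflects packages named in this guide and is an assumption, not the full contents of `requirements.txt`.

```python
import sys
from importlib.metadata import PackageNotFoundError, version
from typing import Dict, List, Optional

# Package -> minimum major version (None means "just needs to be installed").
# This list is an illustrative assumption, not the repository's requirements.txt.
REQUIRED: Dict[str, Optional[int]] = {"bentoml": None, "vllm": None, "pydantic": 2}


def major(version_string: str) -> int:
    """Leading integer of a version string, e.g. '2.7.1' -> 2."""
    return int(version_string.split(".")[0])


def check_environment() -> List[str]:
    """Return a list of problems; an empty list means every check passed."""
    problems = []
    if sys.version_info < (3, 8):
        problems.append("Python 3.8+ is required")
    for package, min_major in REQUIRED.items():
        try:
            installed = version(package)
        except PackageNotFoundError:
            problems.append(f"{package} is not installed")
            continue
        if min_major is not None and major(installed) < min_major:
            problems.append(f"{package}>={min_major}.0 is required (found {installed})")
    return problems


if __name__ == "__main__":
    issues = check_environment()
    print("Environment looks OK" if not issues else "\n".join(issues))
```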

Create a BentoML Service

Define a BentoML Service to customize the serving logic of your language model, using vLLM as the backend. You can find the following example `service.py` file in the cloned repository.

Note

This example Service uses the model mistralai/Mistral-7B-Instruct-v0.2. You can choose other models in the BentoVLLM repository or any other model supported by vLLM based on your needs.

```python
import uuid
from typing import AsyncGenerator

import bentoml
from annotated_types import Ge, Le
from typing_extensions import Annotated

from bentovllm_openai.utils import openai_endpoints

MAX_TOKENS = 1024

PROMPT_TEMPLATE = """<s>[INST]
You are a helpful, respectful and honest assistant. Always answer as helpfully as possible, while being safe. Your answers should not include any harmful, unethical, racist, sexist, toxic, dangerous, or illegal content. Please ensure that your responses are socially unbiased and positive in nature.

If a question does not make any sense, or is not factually coherent, explain why instead of answering something not correct. If you don't know the answer to a question, please don't share false information.

{user_prompt} [/INST] """

MODEL_ID = "mistralai/Mistral-7B-Instruct-v0.2"


@openai_endpoints(served_model=MODEL_ID)
@bentoml.service(
    name="mistral-7b-instruct-service",
    traffic={
        "timeout": 300,
    },
    resources={
        "gpu": 1,
        "gpu_type": "nvidia-l4",
    },
)
class VLLM:
    def __init__(self) -> None:
        from vllm import AsyncEngineArgs, AsyncLLMEngine

        # Create the async vLLM engine once; this loads the model onto the GPU.
        ENGINE_ARGS = AsyncEngineArgs(
            model=MODEL_ID,
            max_model_len=MAX_TOKENS
        )

        self.engine = AsyncLLMEngine.from_engine_args(ENGINE_ARGS)

    @bentoml.api
    async def generate(
        self,
        prompt: str = "Explain superconductors like I'm five years old",
        max_tokens: Annotated[int, Ge(128), Le(MAX_TOKENS)] = MAX_TOKENS,
    ) -> AsyncGenerator[str, None]:
        from vllm import SamplingParams

        SAMPLING_PARAM = SamplingParams(max_tokens=max_tokens)
        prompt = PROMPT_TEMPLATE.format(user_prompt=prompt)

        # Queue the request under a unique ID and get a stream of partial outputs.
        stream = await self.engine.add_request(uuid.uuid4().hex, prompt, SAMPLING_PARAM)

        cursor = 0
        async for request_output in stream:
            # `text` holds everything generated so far; yield only the new part.
            text = request_output.outputs[0].text
            yield text[cursor:]
            cursor = len(text)
```

This script mainly contains the following two parts:

- Constant and template
  - `MAX_TOKENS` sets the upper limit for `max_tokens`, the number of tokens the model can generate in a single request. It is also passed to vLLM as `max_model_len`, which caps the total context length (prompt plus generated tokens), so in practice the output is further limited by the prompt's length.
  - `PROMPT_TEMPLATE` is a pre-defined prompt template that provides interaction context and guidelines for the model.
- A BentoML Service named `VLLM`
  - The `@bentoml.service` decorator defines the `VLLM` class as a BentoML Service, specifying its name, request timeout, and GPU resources.
  - The Service initializes an `AsyncLLMEngine` object from the `vllm` package with the specified engine arguments (`ENGINE_ARGS`). This engine processes the language model requests.
  - The Service exposes an asynchronous API endpoint `generate` that accepts `prompt` and `max_tokens` as input. `max_tokens` is annotated to ensure it is at least 128 and at most `MAX_TOKENS`. Inside the method:
    - The prompt is formatted using `PROMPT_TEMPLATE` so that the model's output follows the template's guidelines.
    - `SamplingParams` is configured with the `max_tokens` parameter, and a request is added to the model's queue using `self.engine.add_request`. Each request is uniquely identified by a UUID.
    - The method returns an asynchronous generator that streams the model's output as it becomes available.
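The streaming loop in `generate` treats `request_output.outputs[0].text` as the full text generated so far and yields only the part after `cursor`. The sketch below reproduces that cumulative-to-incremental pattern without a GPU; `fake_cumulative_stream` is a hypothetical stand-in for the engine's stream, and `to_deltas` mirrors the loop in `service.py`.

```python
import asyncio
from typing import AsyncIterator, List


async def fake_cumulative_stream(chunks: List[str]) -> AsyncIterator[str]:
    """Hypothetical stand-in for the engine stream: each item is the full text so far."""
    text = ""
    for chunk in chunks:
        text += chunk
        await asyncio.sleep(0)  # hand control back to the event loop, as a real stream would
        yield text


async def to_deltas(cumulative: AsyncIterator[str]) -> AsyncIterator[str]:
    """Yield only the newly generated suffix of each cumulative text, like `generate` does."""
    cursor = 0
    async for text in cumulative:
        yield text[cursor:]
        cursor = len(text)


async def main() -> List[str]:
    stream = fake_cumulative_stream(["Super", "conductors ", "carry"])
    return [delta async for delta in to_deltas(stream)]


if __name__ == "__main__":
    print(asyncio.run(main()))
```

Yielding deltas rather than the whole text keeps each streamed chunk small and lets clients simply concatenate what they receive.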

Note

This Service uses the `@openai_endpoints` decorator to set up OpenAI-compatible endpoints (`chat/completions` and `completions`). This means your client can interact with the backend Service (in this case, the `VLLM` class) as if it were communicating directly with OpenAI's API. You can also use these endpoints to generate structured output such as JSON.

This is made possible by the `bentovllm_openai` utility, which does not affect your BentoML Service code, and you can use it for other LLMs as well.

See the OpenAI-compatible endpoints example below for interaction details.
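Because these endpoints follow OpenAI's request format, any HTTP client can call them, not only the `openai` package. A minimal standard-library sketch that builds a `chat/completions` request follows; it assumes the `/v1` base path used by the OpenAI client examples in this guide, and `build_chat_request` is a hypothetical helper.

```python
import json
import urllib.request
from typing import Optional


def build_chat_request(base_url: str, model: str, prompt: str,
                       api_key: Optional[str] = None,
                       stream: bool = False) -> urllib.request.Request:
    """Build a POST to {base_url}/chat/completions using the OpenAI request schema."""
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": stream,
    }
    headers = {"Content-Type": "application/json"}
    if api_key:  # OpenAI-style clients send the key as a bearer token
        headers["Authorization"] = f"Bearer {api_key}"
    return urllib.request.Request(
        url=base_url.rstrip("/") + "/chat/completions",
        data=json.dumps(body).encode("utf-8"),
        headers=headers,
        method="POST",
    )


req = build_chat_request(
    "http://localhost:3000/v1",
    "mistralai/Mistral-7B-Instruct-v0.2",
    "Explain superconductors like I'm five years old",
)
print(req.full_url, req.get_method())
```

With the Service running, `json.load(urllib.request.urlopen(req))` should return a non-streaming completion whose reply text is under `choices[0]["message"]["content"]`, as in OpenAI's response format.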

Run bentoml serve in your project directory to start the Service.

```bash
$ bentoml serve .

2024-01-29T13:10:50+0000 [INFO] [cli] Starting production HTTP BentoServer from "service:VLLM" listening on http://localhost:3000 (Press CTRL+C to quit)
```
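The Service loads the model in `__init__`, so it may take a while before it can answer requests. A minimal sketch that polls the server before sending prompts, assuming BentoML's built-in `/readyz` health endpoint returns HTTP 200 once the Service is ready; `http_ok` and `wait_until_ready` are hypothetical helpers.

```python
import time
import urllib.request
from typing import Callable, Optional


def http_ok(url: str) -> bool:
    """Return True if a GET request to `url` answers with HTTP 200."""
    try:
        with urllib.request.urlopen(url, timeout=5) as resp:
            return resp.status == 200
    except OSError:  # covers URLError, HTTPError (e.g. 503), and timeouts
        return False


def wait_until_ready(base_url: str, timeout: float = 600.0, interval: float = 5.0,
                     probe: Optional[Callable[[str], bool]] = None) -> bool:
    """Poll {base_url}/readyz until it succeeds or `timeout` seconds have passed."""
    check = probe or http_ok  # `probe` lets tests swap in a fake check
    url = base_url.rstrip("/") + "/readyz"
    deadline = time.monotonic() + timeout
    while time.monotonic() < deadline:
        if check(url):
            return True
        time.sleep(interval)
    return False
```

For example, call `wait_until_ready("http://localhost:3000")` in a script before sending the first request.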

The server is active at http://localhost:3000. You can interact with it in different ways.

CURL

```bash
curl -X 'POST' \
  'http://localhost:3000/generate' \
  -H 'accept: text/event-stream' \
  -H 'Content-Type: application/json' \
  -d '{
  "prompt": "Explain superconductors like I'\''m five years old",
  "max_tokens": 1024
}'
```

Python client

```python
import bentoml

with bentoml.SyncHTTPClient("http://localhost:3000") as client:
    response_generator = client.generate(
        prompt="Explain superconductors like I'm five years old",
        max_tokens=1024
    )
    # Each item is a newly generated piece of text; print them without extra newlines
    for response in response_generator:
        print(response, end="")
```

OpenAI-compatible endpoints

The `@openai_endpoints` decorator provides OpenAI-compatible endpoints (`chat/completions` and `completions`) for the Service. To interact with them, set the client's `base_url` parameter to the BentoML server address followed by `/v1`.

```python
from openai import OpenAI

# The local server does not check the key, but the client requires a value
client = OpenAI(base_url='http://localhost:3000/v1', api_key='na')

# Use the following func to get the available models
client.models.list()

chat_completion = client.chat.completions.create(
    model="mistralai/Mistral-7B-Instruct-v0.2",
    messages=[
        {
            "role": "user",
            "content": "Explain superconductors like I'm five years old"
        }
    ],
    stream=True,
)
for chunk in chat_completion:
    # Extract and print the content of the model's reply
    print(chunk.choices[0].delta.content or "", end="")
```
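The `openai` client hides the wire format. For a streaming chat completion, OpenAI-compatible servers send server-sent events: each `data:` line carries a JSON chunk whose `choices[0].delta.content` holds the next piece of text, and a final `data: [DONE]` line ends the stream. The sketch below parses such lines with the standard library; `iter_delta_content` is a hypothetical helper, and the sample lines are made up for illustration rather than captured from this Service.

```python
import json
from typing import Iterable, Iterator


def iter_delta_content(lines: Iterable[str]) -> Iterator[str]:
    """Yield the text fragments from OpenAI-style `data: {...}` streaming lines."""
    for line in lines:
        line = line.strip()
        if not line.startswith("data:"):
            continue  # skip blank separator lines and SSE comments
        payload = line[len("data:"):].strip()
        if payload == "[DONE]":  # sentinel that ends the stream
            break
        chunk = json.loads(payload)
        if not chunk.get("choices"):
            continue  # e.g. a trailing usage-only chunk
        content = chunk["choices"][0].get("delta", {}).get("content")
        if content:
            yield content


sample = [
    'data: {"choices": [{"delta": {"role": "assistant"}}]}',
    "",
    'data: {"choices": [{"delta": {"content": "Hello"}}]}',
    'data: {"choices": [{"delta": {"content": " world"}}]}',
    "data: [DONE]",
]
print("".join(iter_delta_content(sample)))
```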

See also

These OpenAI-compatible endpoints support vLLM extra parameters. For example, you can force the chat/completions endpoint to output a JSON object by using guided_json:

```python
from openai import OpenAI

client = OpenAI(base_url='http://localhost:3000/v1', api_key='na')

# Use the following func to get the available models
client.models.list()

json_schema = {
    "type": "object",
    "properties": {
        "city": {"type": "string"}
    }
}

chat_completion = client.chat.completions.create(
    model="mistralai/Mistral-7B-Instruct-v0.2",
    messages=[
        {
            "role": "user",
            "content": "What is the capital of France?"
        }
    ],
    # vLLM-specific parameters are passed through `extra_body`
    extra_body=dict(guided_json=json_schema),
)

print(chat_completion.choices[0].message.content)  # Prints something like: {"city": "Paris"}
```
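Guided decoding constrains the model's output, but checking the result on the client is still cheap insurance. The hand-written check below covers only the small schema in this example: an object whose `city` property, if present, must be a string (the schema lists no `required` keys, so `city` is optional). `matches_city_schema` is a hypothetical helper; for arbitrary schemas, a general validator such as the `jsonschema` package's `validate` function is a better fit.

```python
import json


def matches_city_schema(raw: str) -> bool:
    """Hand-written check for the example schema: a JSON object whose optional
    "city" property, if present, must be a string."""
    try:
        data = json.loads(raw)
    except json.JSONDecodeError:
        return False  # not valid JSON at all
    if not isinstance(data, dict):
        return False  # schema requires an object
    return "city" not in data or isinstance(data["city"], str)


print(matches_city_schema('{"city": "Paris"}'))
```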

If your Service is deployed with protected endpoints on BentoCloud, you need to set the environment variable OPENAI_API_KEY to your BentoCloud API key first.

```bash
export OPENAI_API_KEY={YOUR_BENTOCLOUD_API_TOKEN}
```

You can then use the following line to replace the client in the above code snippet. Refer to Obtain the endpoint URL to retrieve the endpoint URL.

```python
client = OpenAI(base_url='your_bentocloud_deployment_endpoint_url/v1')
```

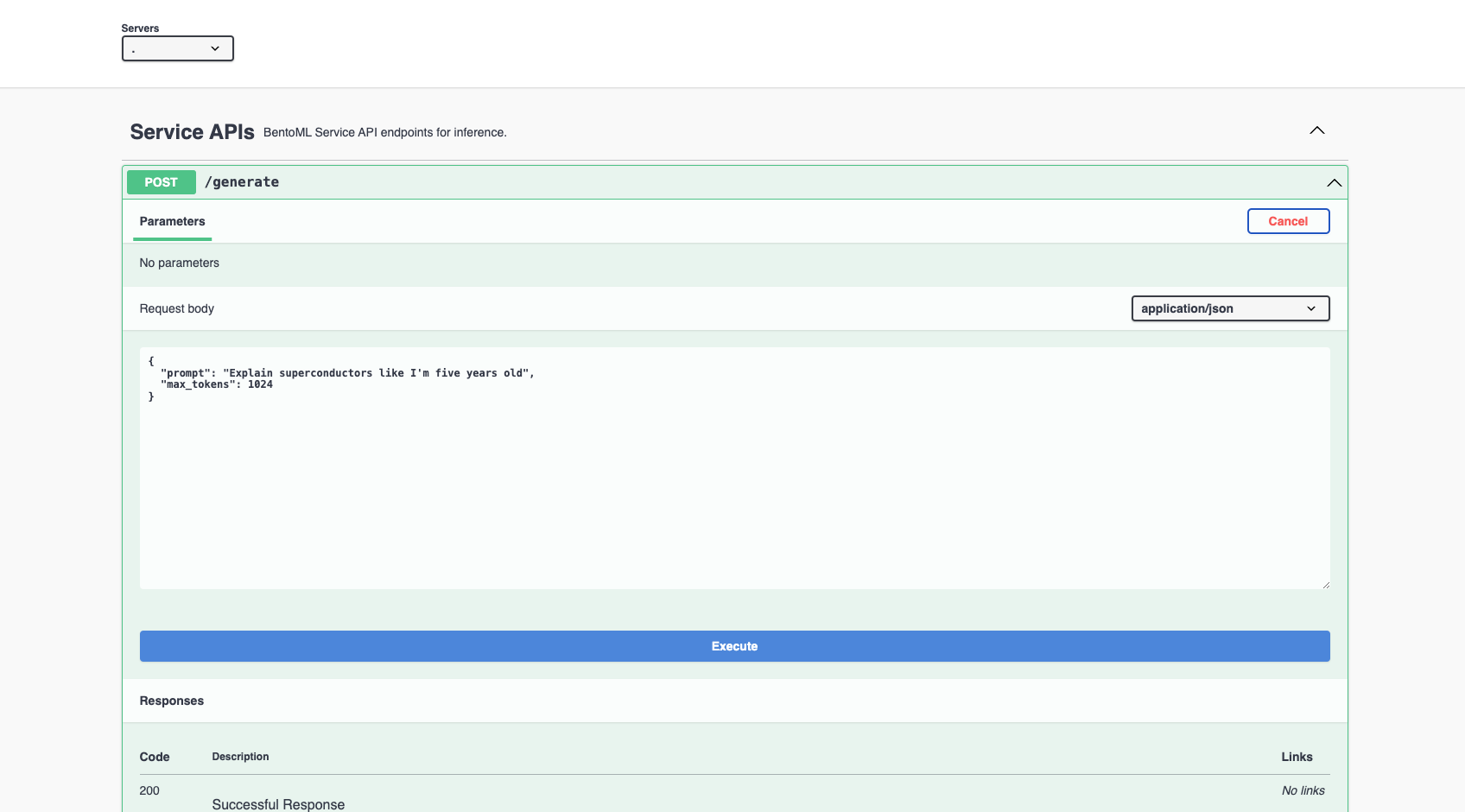

Swagger UI

Visit http://localhost:3000, scroll down to Service APIs, and click Try it out. In the Request body box, enter your prompt and click Execute.

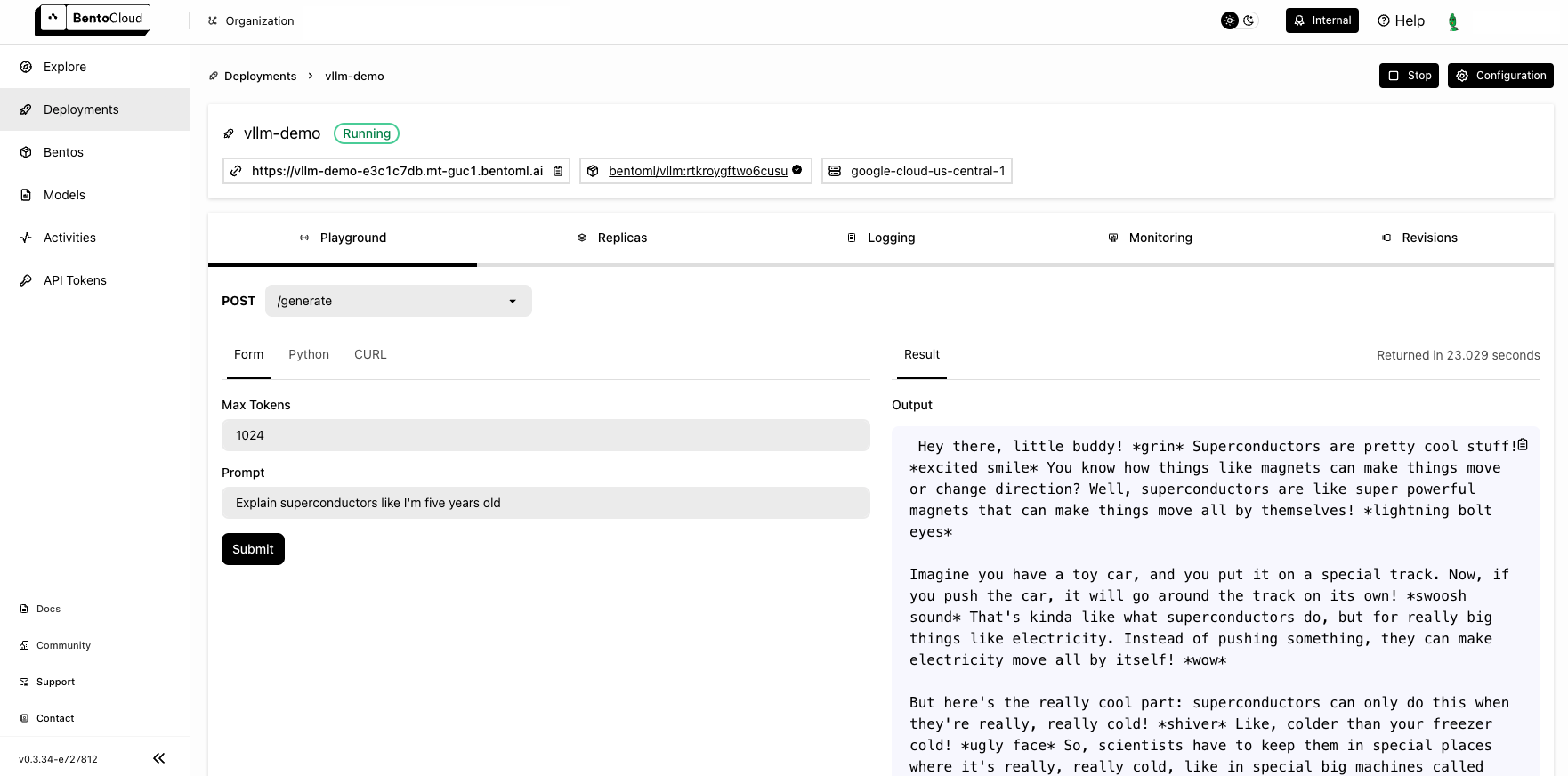

Deploy to BentoCloud

After the Service is ready, you can deploy the project to BentoCloud for better management and scalability. Sign up for a BentoCloud account and get $10 in free credits.

First, specify a configuration YAML file (bentofile.yaml) to define the build options for your application. It is used for packaging your application into a Bento. Here is an example file in the project:

```yaml
service: "service:VLLM"
labels:
  owner: bentoml-team
  stage: demo
include:
  - "*.py"
  - "bentovllm_openai/*.py"
python:
  requirements_txt: "./requirements.txt"
```

Create an API token with Developer Operations Access to log in to BentoCloud, then run the following command to deploy the project.

```bash
bentoml deploy .
```

Once the Deployment is up and running on BentoCloud, you can access it via the exposed URL.

Note

For custom deployment in your own infrastructure, use BentoML to generate an OCI-compliant image.